Visual Processing in Natural Settings

Most of our knowledge about neuronal processing in the early visual pathways comes from studies of subjects that are anesthetized or immobilized to prevent eye movements. These experimental setups introduce unnatural conditions that may limit how well the results apply to vision in the real world. Furthermore, most studies rely on simple, artificial stimuli, such as sinusoidal gratings, to test visual responses. Such stimuli fail to capture the complexity of natural scenes, which are characterized by higher-order spatial correlations and by power spectra that fall off roughly as 1/f² with spatial frequency f. Because of this simplification, we may miss information about how the brain processes natural scenes. Using technologies such as 3D printing and 3D motion-tracking software, this project estimates how differently neuronal signals encode naturalistic versus simple stimuli.
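To make the spectral contrast concrete, here is a minimal sketch, assuming NumPy, that computes the 2D power spectrum of a sinusoidal grating and of a synthetic stand-in for a natural image, then fits the slope of the radially averaged spectrum on log-log axes. The synthetic image (random phases with amplitude proportional to 1/f) is an assumption used in place of a real photograph, and all function names are hypothetical. It reproduces only the power-spectrum (second-order) statistics of natural scenes, not their higher-order correlations.

```python
import numpy as np

def power_spectrum(img):
    """2D power spectrum |F|^2 with zero frequency shifted to the center."""
    img = img - img.mean()  # remove mean luminance so the DC term doesn't dominate
    return np.abs(np.fft.fftshift(np.fft.fft2(img))) ** 2

def radial_average(ps):
    """Mean power on rings of equal spatial frequency (cycles per image)."""
    c = ps.shape[0] // 2
    y, x = np.indices(ps.shape)
    r = np.rint(np.hypot(x - c, y - c)).astype(int)  # ring index = rounded radius
    totals = np.bincount(r.ravel(), weights=ps.ravel())
    counts = np.bincount(r.ravel())
    return totals / np.maximum(counts, 1)

def spectral_slope(img):
    """Slope of log power vs log frequency; about -2 for a 1/f^2 power spectrum."""
    rad = radial_average(power_spectrum(img))
    freqs = np.arange(1, img.shape[0] // 2)  # skip DC, stay below Nyquist
    slope, _ = np.polyfit(np.log(freqs), np.log(rad[freqs]), 1)
    return slope

n = 256
y, x = np.mgrid[0:n, 0:n]
grating = np.sin(2 * np.pi * 8 * x / n)  # vertical bars, 8 cycles per image

# Hypothetical stand-in for a natural scene: random phases, amplitude ~ 1/f
rng = np.random.default_rng(0)
f = np.hypot(np.fft.fftfreq(n)[:, None], np.fft.fftfreq(n)[None, :])
f[0, 0] = 1.0  # avoid division by zero at DC (the mean is removed later anyway)
spectrum = (1.0 / f) * np.exp(2j * np.pi * rng.random((n, n)))
natural_like = np.real(np.fft.ifft2(spectrum))

print("natural-like slope:", spectral_slope(natural_like))
```

The grating's power sits almost entirely in two bins at ±8 cycles per image, while the synthetic scene spreads power across all frequencies with a fitted slope near -2. A real photograph, loaded as a square grayscale array, could be passed to the same functions.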

We designed a new experimental paradigm in which subjects move freely through an environment covered with images of nature while we closely monitor their gaze and body position (using motion capture) to create a 3D movie of each subject's visual experience. This movie is later presented to the same subject in a more typical, simplified experimental setup. By holding all experimental parameters constant except behavioral state, we can explore how the visual brain processes the same visual information differently under naturalistic and simplified conditions.
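The description above does not say how the 3D movie is rendered. As a hypothetical illustration of the geometry involved, the sketch below assumes the eye position and gaze direction are already known in world coordinates (for example, by combining motion capture of the head with eye tracking) and projects points on the image-covered walls into a pinhole view centered on the line of sight. The function names, the z-up world convention, and the unit focal length are all assumptions, not the project's actual pipeline.

```python
import numpy as np

def gaze_basis(gaze_dir, world_up=(0.0, 0.0, 1.0)):
    """Rows are the viewer's right, up and forward axes in world coordinates.
    Assumes a z-up world; undefined (division by zero) if gaze is parallel to world_up."""
    f = np.asarray(gaze_dir, dtype=float)
    f = f / np.linalg.norm(f)
    r = np.cross(f, world_up)
    r = r / np.linalg.norm(r)
    u = np.cross(r, f)
    return np.stack([r, u, f])

def project_to_view(points_world, eye_pos, gaze_dir, focal=1.0):
    """Pinhole projection of (N, 3) world points to (N, 2) view coordinates.
    Points behind the eye are returned as NaN."""
    B = gaze_basis(gaze_dir)
    d = np.asarray(points_world, dtype=float) - np.asarray(eye_pos, dtype=float)
    p = d @ B.T  # each row: (right, up, forward) components in the viewer frame
    uv = np.full((p.shape[0], 2), np.nan)
    front = p[:, 2] > 0
    uv[front] = focal * p[front, :2] / p[front, 2:3]
    return uv

# Wall points 2 m ahead of an eye at the origin looking along +x
wall = np.array([[2.0, 0.0, 0.0],    # straight ahead
                 [2.0, -1.0, 0.0],   # to the viewer's right
                 [2.0, 0.0, 1.0],    # above the line of sight
                 [-2.0, 0.0, 0.0]])  # behind the viewer
uv = project_to_view(wall, eye_pos=[0, 0, 0], gaze_dir=[1, 0, 0])
print(uv)
```

Repeating this projection for every motion-capture frame, with the wall images as textures, is one way such a gaze-centered movie could be assembled; a full renderer would also handle occlusion and the eye's field of view.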

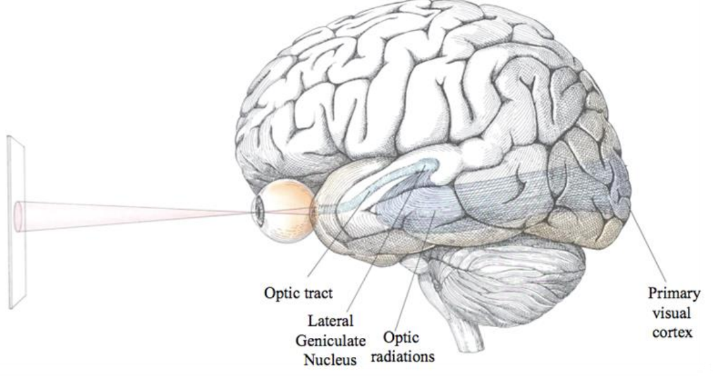

Early visual processing pathways

A typical visual stimulus, a sinusoidal grating (A), compared with a natural scene image (B) and its power spectrum (C).