Current Projects

Multisensory Integration & Learning

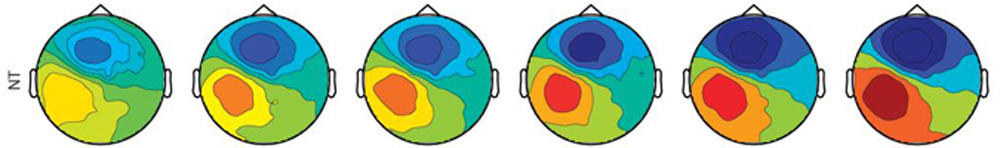

Sensory integration occurs at several temporal stages of cortical processing and involves a distributed network of brain regions. Characterizing these temporal stages and defining their functional significance is crucial to understanding the neurophysiological processes that govern multisensory integration. We have introduced a three-stage temporal model for multisensory object representation that provides an elementary framework for our investigations.

Our overarching goal with our multisensory integration (MSI) projects is a rigorous test of the predictions of this model, which will provide us with an understanding of how multisensory object associations are represented, how fast these associations can be established, and how plastic the multisensory system is. We will also ask at what level of the sensory integration hierarchy cognitive factors such as spatial and object-based attention are capable of operating.

Adolescent Brain Cognitive Development – ABCD

The Adolescent Brain Cognitive Development (ABCD) study is the largest long-term study of brain development and child health in the United States. The National Institutes of Health (NIH) have funded leading researchers in the fields of adolescent development and neuroscience to conduct this ambitious project. The ABCD Research Consortium consists of a Coordinating Center, a Data Analysis and Informatics Center, and 21 research sites across the country, of which the CNL-R is one.

The study goal is to follow approximately 10,000 children starting at ages 9-10 for 10 years, through the critical period of adolescent development. Researchers will track their biological and behavioral development and use cutting-edge technology and neuroimaging methods to determine how childhood experiences (such as sports, videogames, social media, unhealthy sleep patterns, and smoking) interact with each other and with a child’s changing biology to affect brain development and social, behavioral, academic, health, and other outcomes.

Lysosomal Storage Disorders – Niemann-Pick and Batten Disease

Lysosomal storage disorders are a class of metabolic diseases characterized by enzyme deficiencies that result in the abnormal targeting or delivery of toxic metabolites to the lysosome, the cellular structure responsible for degrading and removing a cell's waste products. The CNL is currently investigating two such rare diseases, Batten disease and Niemann-Pick type C, which result in marked neurological pathology.

The CNL collaborates closely with the University of Rochester Batten Center (URBC, led by Drs. John Mink & Erica Augustine), which has been designated a Batten Center for Excellence by the BDSRA, the largest Batten disease research and support organization in North America. Through this collaboration, the CNL-R is able to meet and perform studies with individuals affected by an extremely rare disease, with only ~500-1000 known cases in the United States and a few thousand worldwide.

Current experiments focus on the extent to which well-described cognitive processes are affected by Batten disease and Niemann-Pick type C. The goals are to provide a deeper understanding of how these brains are impacted by their respective disease processes, to determine whether particular pathways or processes are uniquely disrupted, and to provide objective measures of disease progression, a critical component in assessing the therapeutic value of targeted interventions.

Rett Syndrome

Rett syndrome results from a mutation in MECP2, a gene on the X chromosome, and is thus generally sex-linked. An interesting aspect of Rett syndrome is that the MECP2 mutation is usually not inherited from a parent; 95% of the time it represents a sporadic de novo mutation that likely occurs during gametogenesis. Rett syndrome is characterized by a period of typical development during the first 6-18 months of life, after which acquired motor and language skills regress, often abruptly. After the first years of life, the progression of Rett syndrome is highly variable but generally involves a period of many years in which individuals experience apraxia, motor problems, and seizures and are largely non-communicative.

Current research at the CNL focuses on developing cognitive biomarkers that provide insight into the brain pathways affected by the syndrome, helping researchers and families alike understand the cognitive capabilities of individuals who are not able to produce the precise movements required for speech and communication. Critically, our work also aims to provide objective measures of disease progression to help gauge the efficacy of the targeted therapies currently being pursued by many groups around the world.

Inhibitory Control in HIV+ Abstinent Cocaine Users

Combined HIV+ serostatus and cocaine use poses a serious personal and public health risk. Cocaine accelerates the transition from HIV+ to AIDS, possibly due to reduced HIV medication adherence in substance-abusing individuals. In addition, HIV- individuals who currently abuse cocaine frequently practice high-risk sexual behaviors such as inconsistent condom usage.

However, it is unknown whether risky decision-making in the cohort of HIV+ individuals with a history of drug dependence continues after prolonged drug abstinence. Furthermore, while recent research on former cocaine users suggests normalization of electrophysiological responses associated with inhibitory control, it is unknown whether this recovery trajectory persists in the presence of HIV.

Our research uses task-based neuroimaging methods to elucidate the combined effect of substance use and HIV on inhibitory control, a behavioral construct implicated in drug relapse. The outcome of our research will provide insight into the neural basis of relapse risk in this vulnerable and understudied population.

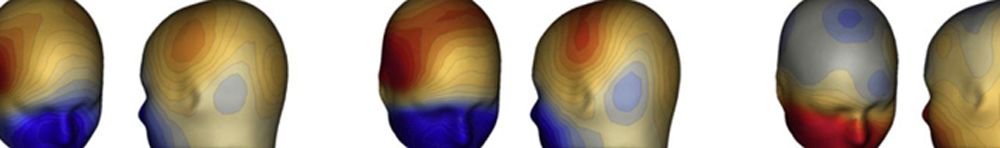

Mobile Brain/Body Imaging

The Mobile Brain/Body Imaging (MoBI) approach represents a new frontier in the assessment of cognitive function: high-density electroencephalography (EEG) is recorded while participants perform tasks that require the allocation of neural resources and simultaneously walk on a treadmill. Most traditional designs have used a minimal-behavior approach to limit the contamination of EEG recordings by movement, a concern that arises when participants engage in more complex real-world behaviors such as walking. Participants are typically asked to sit still and limit eye and head movements. However, the minimal approach puts severe restrictions on the types of behaviors that can be investigated. Importantly, it neglects the fact that a primary function of perception and cognition is to support active, goal-directed, and complex motor behavior in an ever-changing 3D environment. Using MoBI, we can present a cognitive “stress test” to determine how well people allocate and reallocate cognitive resources in real-world situations. This cutting-edge approach can reveal previously masked susceptibility to falling in adults as they get older, detect cognitive decline associated with the onset of mild cognitive impairment and Alzheimer’s disease, and track the cognitive effects of mild traumatic brain injury (e.g., sports concussions), to list a few applications. The implementation of this technology in the Cognitive Neurophysiology Lab at the University of Rochester places us squarely at the forefront of this growing field and enables us to help direct the future of research, evaluation, diagnosis, and rehabilitation in a panoply of critical areas related to human health and well-being.

Current MoBI Study Projects

- Schizophrenia spectrum disorders (SSDs) are chronic, debilitating psychiatric disorders. These multi-dimensional disorders are characterized by impulsivity and deficiencies in planned behavior (e.g., making riskier decisions, not wearing clothes appropriate for the weather outside). Impaired impulse control is typically the first symptom in the cognitive executive functioning domain to become apparent in people with SSDs, even before more visible symptoms manifest. Commonly prescribed antipsychotic medications improve positive symptoms but do not significantly improve cognitive or negative symptoms. We want to better understand cognitive-motor processes in the brain during response inhibition in people with and without SSDs when they are either walking or sitting. Physical activity (walking), and the resultant increase in functional connectivity between the default mode network and frontal executive control networks, may reduce impulsivity and improve cognitive performance in individuals with SSDs. Findings could support the future establishment of an acute walking exercise regimen geared towards reducing impulsivity and improving cognitive functioning in individuals with SSDs.